For a while now, I have wanted to write a useful tool of my own and publish it on GitHub: one that web-scrapes data from websites and web pages.

Sure enough, as soon as I opened GitHub and searched for “web scrap”, I got the following:

There are more than 8,800 search results on this topic. That is a lot of ground to cover for what sounds like a simple piece of software that goes out and extracts data from whatever sites you point it at.

So I asked myself: how and where did all this web scraping begin?

Well.

So I went ahead and dug up a little of the history behind it.

Since the dawn of the Internet some 25 years ago, people have been fascinated by the idea of doing business with customers on the other side of the world without any physical restrictions. As more and more people jumped on the Internet bandwagon, many of them built a business profile online and started to offer products and services to online visitors.

As this wealth of information built up across the Internet, business people took notice and wanted to seize the opportunity it represented. The information could include anything from:

- Price comparison data from 3rd party services

- Social media and contact outreach

- Company reviews and online reputation info

- News and content aggregation

- SEO search results aggregation

- Aggregate data from multiple job boards

To get this data, businesses at first had to copy text manually from HTML pages, which proved extremely inefficient for business applications.

So they moved on to storing web results in spreadsheet software. Many pages used HTML <table> tags to hold their data, which made them perfect candidates for scraping.

Later, as the web continued to evolve, people turned to tools that download content from the server and save it on a client machine, much as we still do today.

Towards the end of the Internet’s second decade, web scraping tools became more mature and sophisticated, gaining the ability to scan and automatically extract the HTML content of websites big and small.

Today’s web scraping tools can also scale with the raw computing power available, and some are paired with AI to make web extraction quicker still.

Having said all this, this brings me to my next exciting point…

We get to build our very own web scraping tool!

While I was researching this topic on GitHub, I also noticed the following.

According to my GitHub search results, Python is the most popular language for this job in the open source community.

As a polyglot engineer, I thought it would be good to keep an open mind about how other languages, such as PHP, Ruby, Node.js and Go, achieve the same web scraping objectives.

But after a brief glance at implementations in those languages, I concluded that Python does a better job than the others, for two simple reasons:

- The depth and breadth of its history in automating web scraping.

- Because of reason 1, its web scraping frameworks and libraries are more proven and come with a wealth of information on handling the trickier data extraction scenarios, which I found harder to come by for other languages.

Thus, I’ll use Python for this project.

For this project, I identified a couple of excellent Python web scraping libraries.

- BeautifulSoup

- Scrapy

You can use either library to fulfil your web scraping needs, or both if you’re feeling adventurous. The key difference between the two is this: BeautifulSoup is primarily an HTML parsing library. You fetch a static HTML page from a specific URL and parse the DOM elements and/or attributes you’re interested in extracting. Scrapy, on the other hand, is a full framework built for the more extensive web scraping scenarios that BeautifulSoup alone cannot handle. This is especially true if you need high performance while crawling and fetching many URLs. In short, Scrapy can crawl a site at the domain level and follow nested URLs under the same domain, which BeautifulSoup can’t do on its own.

To make this difference clear, let’s start with some code examples.

BeautifulSoup

Let’s say I want to web-scrape a list of available software developer jobs in Sydney from a reputable job board, jobs I may (or may not) be interested in applying for. My motivation is to find an effective and efficient way to track the positions I have (or have not) applied for, at any time of the day or week. I may not have the time to open my browser, check every job ad online and read through the details one by one. Therefore I want to automate this step, which is the perfect motivation for some heavy web scraping.

To start, import the following libraries.

Import appropriate libraries

    from urllib.request import urlopen
    from bs4 import BeautifulSoup
    import webbrowser

Define our base URL.

Set up our base URL

    base_url = "https://www.seek.com.au/software-developer-jobs/in-All-Sydney-NSW"

Then query that URL and load its HTML content into a BeautifulSoup object.

Query url and instantiate BeautifulSoup object

    page = urlopen(base_url)
    # Name the parser explicitly so BeautifulSoup doesn't have to guess one
    soup = BeautifulSoup(page, "html.parser")

We prettify the content so that it is legible before writing it back to our own static HTML file.

Prettify the HTML content

    html_str = soup.prettify()

Write the contents to a local file.

Write the HTML to a local file

    # The with block closes the file for us, even if the write fails
    with open('base_url.html', 'w') as html_file:
        html_file.write(html_str)

And we’re done! You can now view the newly written file by locating it and opening it.

Or you can open it programmatically, as below. It achieves the same thing.

Open up the newly written HTML file

    # Defined outside the try block so the error message can always refer to it
    file_name = "../../base_url.html"
    try:
        webbrowser.open_new_tab(file_name)
    except webbrowser.Error:
        print("Cannot open local file: {0}".format(file_name))

Now all that is nice and dandy and taken care off, we got right into the ‘meatier’ side of things, which matters the most.

That is we want BeautifulSoup to do the major scraping work for us!

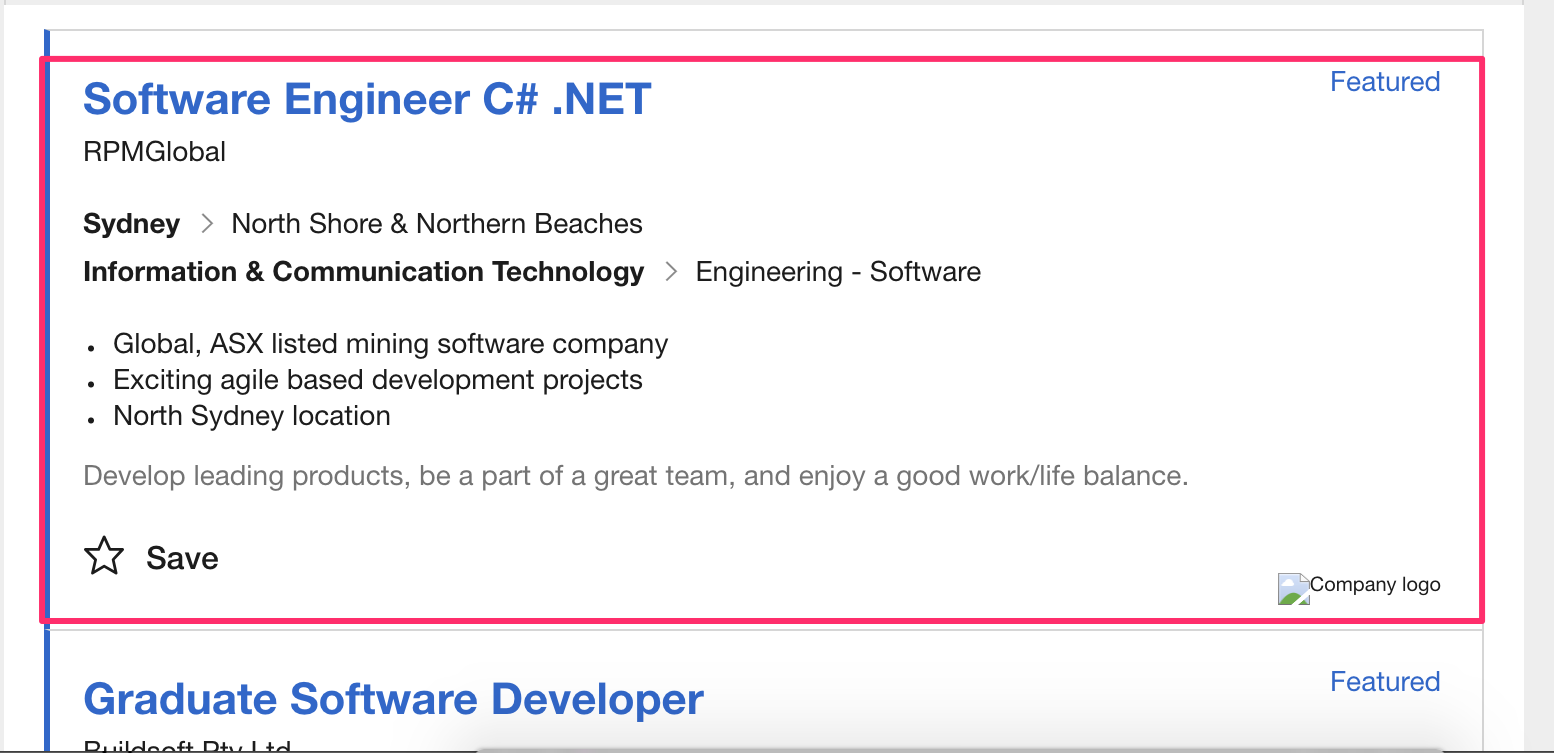

If you open up the base_url.html file, you’ll see the following:

From this screenshot, I know what my data extraction needs are going to be.

Let’s keep the requirements simple: as a professional software developer, I’m interested in finding all the software developer roles currently available in the Sydney job market via Seek. Based on the screenshot, I would like to obtain the following information:

- Job title

- Company name/Recruitment agency name

- City location

- Suburb area

- Salary range (if any)

- Role specification

- Job description

Every job ad I noticed on the same page has the same set of attributes as the one in the screenshot. With that assumption, I will tell BeautifulSoup to parse the list of jobs based on the requirements above.

If you open up the HTML content, you can identify the relevant HTML elements, as below.

HTML auto-generated by BeautifulSoup

    <html>
      <body>
        ...
        <article aria-label="Software Engineer C# .NET" data-automation="premiumJob" data-job-id="36225685">
          ...
          <a data-automation="jobCompany" title="Jobs at RPMGlobal">
            RPMGlobal
          </a>
          ...
          <a data-automation="jobLocation" >
            Sydney
          </a>
          ...
          <a data-automation="jobArea" >
            North Shore & Northern Beaches
          </a>
          <ul>
            <li>
              Global, ASX listed mining software company
            </li>
            <li>
              Exciting agile based development projects
            </li>
            <li>
              North Sydney location
            </li>
          </ul>
          <span data-automation="jobShortDescription">
            Develop leading products, be a part of a great team, and enjoy a good work/life balance.
          </span>
        </article>
      </body>
    </html>

You will notice some clear patterns in the data attributes that we can use here.

First, we fetch all the article tags on the page.

Grab all article tags

    job_articles = soup.find_all("article")

After fetching all the article tags, we loop over this list to extract some data for each job.

Loop through each job article and do something with them

    for a_job_article in job_articles:
        extract_details(a_job_article)

And here’s our implementation of the extract_details function.

Let's get extractin'!

    def extract_details(a_job_article):
        # Job title
        print(a_job_article["aria-label"])

        # Company
        company = a_job_article.find(attrs={"data-automation": "jobCompany"})
        if company is not None:
            print(company.text)

        # Location
        location = a_job_article.find(attrs={"data-automation": "jobLocation"})
        if location is not None:
            print(location.text)

        # Area
        area = a_job_article.find(attrs={"data-automation": "jobArea"})
        if area is not None:
            print(area.text)

        # Salary range
        salary = a_job_article.find(attrs={"data-automation": "jobSalary"})
        if salary is not None:
            print(salary.text)

        # Duties and tasks (optional)
        dutiestasks_ul = a_job_article.find('ul')
        if dutiestasks_ul is not None:
            for dutiestasks_item in dutiestasks_ul.find_all('li'):
                print(dutiestasks_item.text)

        # Job Description
        job_description = a_job_article.find(attrs={"data-automation": "jobShortDescription"})
        if job_description is not None:
            print(job_description.text)

Despite being a ‘sizeable’ function, it’s easy to read, and it makes clear what my data extraction requirements are.

I find all the relevant data by telling BeautifulSoup to search for specific elements that carry certain HTML attributes, such as data-automation. I do this by calling one of BeautifulSoup’s core API methods, find. It scans the HTML and returns the first element that matches the criteria you specify. Simple tag names such as ul, li and span can be used on their own. In our case, the matching needs are slightly more complex, so we pass an extra attrs parameter to reach our data extraction goals.

You will also notice that I placed a number of conditional checks in the function. If an HTML element is not found, we don’t want our scraping tool to crash and burn before it has scanned the rest of the HTML document. This is to be expected when scraping: we can’t assume all the data is available all the time.
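The find-then-check pattern can also be folded into a small helper. What follows is a minimal sketch rather than the code used in this post: text_of is a hypothetical helper name (BeautifulSoup does not provide it), and the HTML is a cut-down version of the sample markup with the salary element deliberately left out.

```python
from bs4 import BeautifulSoup

# Cut-down sample markup; note there is no jobSalary element in it
SAMPLE_HTML = """
<article aria-label="Software Engineer C# .NET">
  <a data-automation="jobCompany">RPMGlobal</a>
  <a data-automation="jobLocation">Sydney</a>
</article>
"""


def text_of(parent, automation_name, default=""):
    """Hypothetical helper: text of the first matching element, or a default."""
    element = parent.find(attrs={"data-automation": automation_name})
    if element is None:
        return default
    return element.get_text(strip=True)


article = BeautifulSoup(SAMPLE_HTML, "html.parser").find("article")
company = text_of(article, "jobCompany")
salary = text_of(article, "jobSalary", default="n/a")
print(company, salary)
```

The missing salary falls back to the default instead of raising an error, which is the same protection the explicit checks give, just written once.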

To begin web-scraping, run the following:

Command

    python py-webby-to-scrapy.py

You will then see the following lines produced:

Command Output

    Software Engineer C# .NET
    RPMGlobal
    Sydney
    North Shore & Northern Beaches
    Global, ASX listed mining software company
    Exciting agile based development projects
    North Sydney location
    Developing leading products, be part of a great team, and enjoy a good work/life balance

Now I know that the data is being fetched as expected.

The next important bit is to extract it and populate a CSV file with it.

To do this, write the following:

Create our CSV writer programmatically

    import csv

    # Opening in 'w' mode empties any existing file contents first
    with open('bs_job_searches.csv', 'w', newline='') as csv_file:
        # Setup CSV headings for the CSV stream writer
        fieldnames = ['job_title', 'company', 'location', 'area', 'salary_range', 'role_specification', 'job_description']
        csv_writer = csv.DictWriter(csv_file, fieldnames=fieldnames)
        csv_writer.writeheader()

        # fetch_articles() is assumed to wrap the soup.find_all("article") call shown earlier
        job_articles = fetch_articles()

        # Let the data extraction commence by passing the list and the csv stream writer
        for a_job_article in job_articles:
            extract_details(a_job_article, csv_writer)

Going back to the extract_details function, the change is fairly straightforward. We simply replace the print statements with a dictionary, assigning each piece of data to its appropriate key. Like so.

Extracting data to CSV this time!

    def extract_details(a_job_article, csv_writer=None):
        # create the dictionary to store all data to their relevant fields
        job_article_dict = {}

        # Job title
        job_article_dict["job_title"] = a_job_article["aria-label"]

        # Company
        company = a_job_article.find(attrs={"data-automation": "jobCompany"})
        if company is not None:
            job_article_dict["company"] = company.text

        # Location
        location = a_job_article.find(attrs={"data-automation": "jobLocation"})
        if location is not None:
            job_article_dict["location"] = location.text

        # Area
        area = a_job_article.find(attrs={"data-automation": "jobArea"})
        if area is not None:
            job_article_dict["area"] = area.text

        # Salary range
        salary = a_job_article.find(attrs={"data-automation": "jobSalary"})
        if salary is not None:
            job_article_dict["salary_range"] = salary.text

        # Duties and tasks (optional)
        dutiestasks_ul = a_job_article.find('ul')
        if dutiestasks_ul is not None:
            list_of_dutiestasks = [item.text for item in dutiestasks_ul.find_all('li')]
            job_article_dict["role_specification"] = ';'.join(list_of_dutiestasks)
        else:
            job_article_dict["role_specification"] = ""

        # Job Description
        job_description = a_job_article.find(attrs={"data-automation": "jobShortDescription"})
        if job_description is not None:
            job_article_dict["job_description"] = job_description.text

        # Finally write them to the csv file (skipped when no writer was passed in)
        if csv_writer is not None:
            csv_writer.writerow(job_article_dict)
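One detail makes the dictionary approach forgiving: csv.DictWriter writes an empty value for any field that is missing from the row, so a job with no salary still produces a well-formed line. Here is a minimal sketch of that behaviour, using an in-memory buffer and a shortened field list instead of the real file:

```python
import csv
import io

# Shortened field list for illustration; the post's real list has seven fields
fieldnames = ["job_title", "company", "salary_range"]

buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=fieldnames)
writer.writeheader()

# No "salary_range" key here: DictWriter fills the gap with an empty string
writer.writerow({"job_title": "Software Engineer C# .NET", "company": "RPMGlobal"})

csv_text = buffer.getvalue()
print(csv_text)
```

A key that is not in fieldnames is the opposite case: DictWriter raises a ValueError by default, so the dictionary keys and the header list need to stay in sync.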

That’s it for BeautifulSoup!

It does a pretty good job for our simple data extraction needs.

However, it is not without limitations. As it is only an HTML parsing tool, it cannot handle the more sophisticated scraping you need as you navigate further into a site.

What if the site you’re on has:

- pagination links to navigate other items in a growing list?

- details pages, linked from the list on the same page, that you want to visit and grab data from?

- infinite scrolling pages whose content is loaded via AJAX?

How are we supposed to web-scrape in such situations?

BeautifulSoup on its own has no ability to crawl the site(s) we want.

In the cases BeautifulSoup can’t handle, that’s where Scrapy comes in to help us!

So let’s get straight to our code sample!

Scrapy

As Scrapy is a fully-fledged web crawling framework, you have to get used to its CLI commands and how they work before doing any web scraping with it.

To start off, open up your terminal window.

To create a new project, run scrapy startproject jobsearchscraper

Startup Scrapy project

    scrapy startproject jobsearchscraper

After running it, you will see the following folder structure:

Typical Scrapy folder structure

    jobsearchscraper/
        scrapy.cfg
        jobsearchscraper/
            __init__.py
            items.py
            middlewares.py
            pipelines.py
            settings.py
            spiders/
                __init__.py

This is what you get when you set up a Scrapy project. Like any framework, it comes with its own set of rules and configuration, along with pipelines and middlewares that you can leverage in building your app.

The most important folder to pay attention to is the spiders folder.

Think of a real spider: it crawls all over the place, spinning webs to extend its territory. In doing so it gains full awareness of its surroundings and can find and catch prey wherever it may be. In this framework, that is precisely what web spiders are built for. Scrapy uses your Spider class to crawl a website (or a group of websites) and gather what we need from it: which initial requests to make, which links to follow, and so on, before we go ahead with some serious web scraping fun.

Go to the spiders folder, create a file called job_search_spider.py, and import scrapy.

Import our library

    import scrapy

Following on from the previous exercise of extracting the latest software developer jobs, we start by defining the variables Scrapy requires in our Spider class.

Our very first Scrapy file

    # The base class is scrapy.Spider, with a capital S
    class JobSearchSpider(scrapy.Spider):
        name = "job_searches"
        allowed_domains = ["seek.com.au"]
        start_urls = ["https://www.seek.com.au/software-developer-jobs/in-All-Sydney-NSW"]

Here we give our spider a unique name, job_searches, which Scrapy uses to identify it whenever we want it to make web requests. We also define which domains the spider is allowed to scrape, and we list the specific URLs in the start_urls array where Scrapy should begin crawling. In short, this setup says the job_searches spider starts its crawl from one specific URL and stays under the seek.com.au root domain.

Now that we’ve got that out of the way, let’s move on.

In our BeautifulSoup exercise earlier, we used find method calls to scan for the elements that contain our data.

In Scrapy, however, we do the following:

Crawling and web-scraping again - using Scrapy this time

    # Inside the JobSearchSpider class
    def parse(self, response):
        self.log('Browsing ' + response.url)
        job_articles = response.css('article')

        for job in job_articles:
            # Initialize our dictionary
            job_article_dict = {}

            # fetch our duties and tasks in the li tags
            list_of_dutiestasks = []
            duties_list = job.css('ul li')
            for each_duty in duties_list:
                duty_text = each_duty.css('span[data-automation="false"]::text').extract_first()
                # extract_first() returns None when nothing matches; skip those so join() cannot fail
                if duty_text is not None:
                    list_of_dutiestasks.append(duty_text)

            # fetch our data elements
            job_article_dict['job_title'] = job.css('article::attr(aria-label)').extract_first()
            job_article_dict['company'] = job.css('a[data-automation="jobCompany"]::text').extract_first()
            job_article_dict['location'] = job.css('a[data-automation="jobLocation"]::text').extract_first()
            job_article_dict['area'] = job.css('a[data-automation="jobArea"]::text').extract_first()
            job_article_dict['salary_range'] = job.css('span[data-automation="jobSalary"] span::text').extract_first()
            # No trailing comma here, otherwise the value becomes a one-element tuple
            job_article_dict['role_specification'] = ';'.join(list_of_dutiestasks)
            job_article_dict['job_description'] = job.css('span[data-automation="jobShortDescription"] span[data-automation="false"]::text').extract_first()

            yield job_article_dict

Compared with our BeautifulSoup exercise, the structure looks the same: the loop over all the HTML elements that hold job article information, our item dictionary, and so on. The only difference is that we replace the find method with Scrapy’s CSS selector API, .css(), to find and match the HTML elements we want. If you come from a front-end development background and know your CSS well, this will be second nature to you, and you can put your knowledge of CSS selectors to good use. Otherwise, you can use the xpath() option instead, which takes XPath, the path expression language for navigating and identifying nodes anywhere in an HTML/XML document.

Notice that at the end of each iteration over job_articles, we yield our job article dictionary rather than collecting everything in a list. Scrapy makes heavy use of generators to deal with the ever-growing list of pages and sites the program is going to crawl.

This makes crawling more memory-efficient, because items are handed over one at a time instead of being held in memory all at once.
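To see why yielding helps, here is a small plain-Python sketch with no Scrapy involved. parse_titles is a hypothetical stand-in for a parse method; the point is that each item is built only when the consumer asks for it.

```python
def parse_titles(titles):
    """Hypothetical stand-in for a parse method: yields one item at a time."""
    for title in titles:
        print("building item for", title)
        yield {"job_title": title}


items = parse_titles(["Software Engineer", "Data Engineer", "Web Developer"])

# Nothing has been built yet; the generator body runs only on demand
first_item = next(items)

# The remaining items are produced as the consumer iterates
remaining = list(items)
```

Only the first "building item" message is printed before next() returns, which shows that the other two items do not exist until something iterates further.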

Now.

Here comes the fun part!

We’re going to follow the pagination links to continue fetching more data!!

To do this, just after the for loop ends, we add the following code:

Pagination time!

    next_page_url = response.css('a[data-automation="page-next"]::attr(href)').extract_first()
    # Only log and follow the link when one was found; on the last page this is None
    if next_page_url:
        self.log("Next page url to navigate: " + next_page_url)
        next_page_url = response.urljoin(next_page_url)
        yield scrapy.Request(url=next_page_url, callback=self.parse)

That’s it!

This is how incredibly easy it is to set up.

To understand it, let’s first look at the pagination HTML markup.

Pagination HTML markup

    <div>
      <p>
        <span class="_2UKqRah _2454KzL">1</span>
        <span class=""><a href="/software-developer-jobs/in-All-Sydney-NSW?page=2" rel="nofollow" class="_2UKqRah" data-automation="page-2" target="_self">2</a></span>
        <span class=""><a href="/software-developer-jobs/in-All-Sydney-NSW?page=3" rel="nofollow" class="_2UKqRah" data-automation="page-3" target="_self">3</a></span>
        <span class="K1Fdmkw"><a href="/software-developer-jobs/in-All-Sydney-NSW?page=4" rel="nofollow" class="_2UKqRah" data-automation="page-4" target="_self">4</a></span>
        <span class="K1Fdmkw"><a href="/software-developer-jobs/in-All-Sydney-NSW?page=5" rel="nofollow" class="_2UKqRah" data-automation="page-5" target="_self">5</a></span>
        <span class="K1Fdmkw"><a href="/software-developer-jobs/in-All-Sydney-NSW?page=6" rel="nofollow" class="_2UKqRah" data-automation="page-6" target="_self">6</a></span>
        <span class="K1Fdmkw"><a href="/software-developer-jobs/in-All-Sydney-NSW?page=7" rel="nofollow" class="_2UKqRah" data-automation="page-7" target="_self">7</a></span>
        <a href="/software-developer-jobs/in-All-Sydney-NSW?page=2" rel="nofollow next" class="_1XIONbW" data-automation="page-next" target="_self">Next</a>
      </p>
    </div>

There’s little need for me to explain this markup; it is a typical pattern for pagination links, where each page has its own a href link tag.

What we’re interested in is the a href link whose text says Next.

That’s our guy.

So how do we tell this link apart from the rest of the hyperlinks? The answer is right in front of us.

Its data-automation attribute says ‘page-next’. Since that value is unique in this markup (and on the entire page), we can safely assume it’s the link we want to fetch. Hence our CSS query is written as follows:

Getting the right pagination query

    response.css('a[data-automation="page-next"]::attr(href)').extract_first()

This says: on the current page, find the element whose data-automation attribute is set to ‘page-next’ and select its href attribute. The css call on its own gives us a list of selector objects rather than plain text, so we call extract_first() to pull out the actual href value as a string (or None if nothing matched) before we can make a page request with it.

As long as a pagination URL was found, i.e. if next_page_url: passes, we obtain its absolute URL by calling response.urljoin(next_page_url), because the href we extracted is a relative path to begin with. We then build a Scrapy request for the paginated link and yield it, leaving Scrapy to schedule it and fetch the page from the server. The callback we pass is what Scrapy calls once the response has come back. Here we hand control back to the same parse method, since the next page holds more job articles we wish to parse and extract. Once we’re done parsing that page, we look for the next page link and the cycle starts again. This repeats until we reach the end of the list or hit a page that can’t be fetched, at which point we keep the data extracted so far.
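The relative-to-absolute step can be tried on its own. This sketch uses urljoin from the Python standard library, on the assumption that response.urljoin resolves the link against the URL of the page being parsed in the same way; the two URLs are taken from the markup and start_urls shown in this post.

```python
from urllib.parse import urljoin

# URL of the page currently being parsed (the spider's start URL)
page_url = "https://www.seek.com.au/software-developer-jobs/in-All-Sydney-NSW"

# Relative href taken from the "Next" link in the pagination markup
next_href = "/software-developer-jobs/in-All-Sydney-NSW?page=2"

# Resolve the relative href against the page URL to get an absolute URL
absolute_url = urljoin(page_url, next_href)
print(absolute_url)
```

The result is a full URL that can be requested directly, which is what the next request needs.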

With that, we run the following command:

Let the drums roll!!

    scrapy runspider ./jobsearchscraper/spiders/job_search_spider.py

On the console, Scrapy will report how many items have been extracted.

Report of items being web-scraped

    2018-06-10 16:42:44 [scrapy.core.engine] INFO: Closing spider (finished)
    2018-06-10 16:42:44 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
    {'downloader/request_bytes': 12302,
    ..........
    'item_scraped_count': 513,
    ..........
    'start_time': datetime.datetime(2018, 6, 10, 6, 42, 25, 472517)}
    2018-06-10 16:42:44 [scrapy.core.engine] INFO: Spider closed (finished)

And that’s it!

Those are the basics of Scrapy!

Scrapy is an awesome framework for tackling the web browsing scenarios that BeautifulSoup can’t handle on its own, such as paginated links, infinite scrolling pages or pages that link through to details content.

Which is great… but you may ask: how does Scrapy handle CSV data export like we did with BeautifulSoup earlier? Does it fare any better?

Well.

Glad you asked. This is even easier to accomplish!

To achieve this, run the following command in your terminal:

Magic happens!!

    scrapy crawl job_searches -o job_searches.csv

Voila!

All done and dusted!!

Find the CSV file you just exported, and you will see that all the column headers and rows have been exported correctly and beautifully.

No need to write any code for parsing or for reading and writing files.

No need to import any data export libraries or plugins.

Just a few simple CLI commands and away you go!

Scrapy includes the most common data export formats out of the box, such as XML, CSV and JSON. It also comes with built-in support for different serialization formats and storage backends, so you can build more advanced data export functionality should you wish. You can read more about it here.
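If you would rather not pass -o on every run, the export can also be configured in the project’s settings.py. This is a sketch, assuming Scrapy 2.1 or later, where the FEEDS setting is available; the file name simply mirrors the one used on the command line in this post.

```python
# settings.py (fragment): maps each output file to its export options
FEEDS = {
    "job_searches.csv": {
        "format": "csv",      # exporter to use for this file
        "encoding": "utf8",   # text encoding of the exported file
    },
}
```

With this in place, running scrapy crawl job_searches on its own should write the CSV file without any extra flags.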

Concluding Thoughts

There you have it.

Those are just the core basics of using two of Python’s best-known web scraping tools.

This is by no means a complete guide to getting the most out of them. You can do a great deal more with them than I have shown. My web scraping example is too simple to explore all the features BeautifulSoup and Scrapy offer, and those features are out of scope for this post, so I will stop here.

The main limitation I find is that neither tool executes JavaScript, so they cannot scrape content that is rendered in the browser by a JS framework such as Angular, React or Vue.js. For that you need to integrate a separate tool such as Splash, a JavaScript rendering service that loads the page and runs its JS for you before you scrape the result. Interestingly, Splash is scripted in Lua, so expect to familiarise yourself with Lua beforehand.

I will have to cover these in future posts, should I explore their use cases more deeply.

While it is great fun playing around with them, I must also stress the caveats in using them.

As I would picture-quote:

This quote comes from Uncle Ben, the famous fictional uncle of the web-slinging hero Spider-Man, who warned him of the dangers of not using his superpowers correctly (or morally, should I say). If you do something wrong with them, there will be repercussions down the line. Sure enough, the uncle sadly died because our hero neglected his moral duty and let a criminal get away. The hero felt horrible about his actions, and learned the hard way to use his powers for the greater good rather than misuse them. He lived up to that moral standard ever after.

Fittingly, the same applies to dev ‘heroes’ who want to crawl websites; they face similar ethical questions. Will they be web-scraping for the right reasons? Or will they be web-scraping to steal and copy data for their own bad purposes? Who will win and who will lose in the process? Who will suffer and be hurt the most? Is it legal to do so? If not, do you have consent to do so? Does it only encourage anti-competitive business behaviour? And so on.

These are the looming ethical questions that will haunt developers for days and days.

Every language, framework and library has its own ‘superpowers’ to do great things, and it’s up to us developers to take serious responsibility for how we plan to use them. Are we using them for the greater good? Or are we slipping into the dark side of their limitless power?

We should tread carefully on this subject, and seriously ask ourselves whether another business or person could be negatively impacted by what we do. Will they end up like Uncle Ben, who suffered in vain? Or will they be saved from harm because we know the rights and wrongs of powerful web scraping?

Hence, we must define our web scraping needs with a purpose.

Purposeful web scraping that comes with great care (and responsibility).

Think about how many Uncle Bens out there you could save, and avoid landing yourself in legal (and moral) hot water unnecessarily.

You can read more about this sensitive subject here, and here, and here, and here, etc.

Till next time, Happy (and ethical) Coding!

PS: You can find my sample code on my GitHub account here, if you’d like to know more about it.

For more learning resources on web scraping, you can check the links below: