AI-generated image by Microsoft Bing Image Creator

Introduction

If you’re like me, you’ve probably felt the frustration of developing AWS applications (Lambda, CloudFront, S3, and so on) directly in the cloud with tools like SAM and CloudFormation. Now that I’ve been delving into data engineering, I’ve hit the same wall with AWS Glue jobs, one of AWS’s main data engineering offerings. Pushing Glue jobs straight to the cloud means waiting for job runs, wrestling with the nitty-gritty of the Glue binaries setup, putting up with slow feedback loops, and watching your AWS bill creep up with every test iteration. I’ve been there, and it’s not fun.

That’s why I built this local development environment. After experimenting with different approaches, I’ve put together a Docker-based setup that lets me develop and test Glue jobs on my laptop before deploying to AWS. The best part? It includes the real-world components I use in my cloud environments: Kafka for streaming data, Iceberg for my data lake tables, and LocalStack to emulate AWS services.

In this guide, I’ll walk you through my setup step-by-step. Whether you’re working on batch ETL jobs or real-time streaming pipelines, this environment will save you time, money, and a lot of headaches.

What We’re Building

Here’s what I’ve included in my local stack:

- AWS Glue 4.0 - The core ETL engine, just like in production

- JupyterLab - For interactive development and quick experiments

- Confluent Kafka - Because most of my pipelines involve streaming data

- Apache Iceberg - My go-to table format for ACID guarantees and time travel

- LocalStack - To mock S3 and DynamoDB without touching AWS

Before You Start

Make sure you have these installed on your machine:

- Docker Desktop (20.10+) with at least 8GB RAM allocated

- Docker Compose v2.0+

- Basic familiarity with AWS Glue and Spark

- Terraform with tflocal (the terraform-local wrapper that points Terraform at LocalStack)

My Project Structure

Here’s how I organize my demo projects:

```
docker-glue-pyspark-demo/
├── docker-compose.yml
├── Dockerfile
├── .env
├── scripts/
│   ├── start-containers.sh
│   └── shutdown-containers.sh
├── plain/
│   ├── bronze_job.py
│   ├── silver_job.py
│   └── gold_job.py
├── terraform/
│   ├── main.tf
│   └── providers.tf
├── poetry.lock
├── pyproject.toml
└── notebooks/
```

Step 1: Docker Compose Setup
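One networking detail shapes the compose file that follows: containers on the Compose networks reach each other by service name (localstack:4566 and kafka:29092), while code running on your host goes through the published ports (localhost:4566 and localhost:9092). As a minimal sketch, the helper below picks the right pair from an environment flag. Both the RUNNING_IN_DOCKER variable and the service_endpoints function are hypothetical, not part of the repo.

```python
import os

# Endpoints as seen from inside a Compose container vs. from the host,
# mirroring the service names and published ports in docker-compose.yml
IN_CONTAINER = {"localstack": "http://localstack:4566", "kafka": "kafka:29092"}
ON_HOST = {"localstack": "http://localhost:4566", "kafka": "localhost:9092"}


def service_endpoints(env=None):
    """Return LocalStack/Kafka endpoints for the current context.

    Assumption: you set RUNNING_IN_DOCKER=1 (a hypothetical variable) in the
    environment of each container that runs job code.
    """
    env = os.environ if env is None else env
    in_docker = env.get("RUNNING_IN_DOCKER", "0") == "1"
    # Return a copy so callers can't mutate the module-level defaults
    return dict(IN_CONTAINER if in_docker else ON_HOST)


if __name__ == "__main__":
    print(service_endpoints())
```

A job could then read its bootstrap servers from service_endpoints()["kafka"] instead of hard-coding kafka:29092.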

Create docker-compose.yml:

```yaml
services:
  glue-pyspark:
    build:
      context: .
      dockerfile: Dockerfile
    container_name: glue-pyspark-poc
    image: my-glue-pyspark
    volumes:
      - ./plain:/app/plain
      - ./purchase_order:/app/purchase_order
    ports:
      - "18080:18080"
    environment:
      AWS_ACCESS_KEY_ID: "test"
      AWS_SECRET_ACCESS_KEY: "test"
      AWS_REGION: "us-east-1"
    stdin_open: true # Keep STDIN open
    tty: true
    depends_on:
      - kafka
      - iceberg
      - localstack
    networks:
      - localstack
      - kafka-net

  jupyterlab:
    image: my-glue-pyspark
    container_name: jupyterlab-poc
    environment:
      AWS_ACCESS_KEY_ID: "test"
      AWS_SECRET_ACCESS_KEY: "test"
      AWS_REGION: "us-east-1"
      DISABLE_SSL: "true" # Compose expects strings here, so quote booleans
    # Note: the image's ENTRYPOINT is ["/bin/bash", "-l", "-c"], so bash runs only
    # the first element below; the remaining elements become $0, $1, ... of that
    # shell rather than arguments to jupyter_start.sh.
    command:
      [
        "/home/glue_user/jupyter/jupyter_start.sh",
        "--ip=0.0.0.0",
        "--no-browser",
        "--allow-root",
        "--NotebookApp.token=test",
        "--NotebookApp.allow_origin=*",
        "--NotebookApp.base_url=http://localhost:8888",
        "--NotebookApp.token_expire_in=36",
      ]
    ports:
      - "8888:8888"
    depends_on:
      - glue-pyspark
    volumes_from:
      - glue-pyspark
    networks:
      - localstack
      - kafka-net

  localstack:
    image: localstack/localstack
    environment:
      - SERVICES=dynamodb,s3,iam
      - DEFAULT_REGION=us-east-1
      - AWS_ACCESS_KEY_ID=test
      - AWS_SECRET_ACCESS_KEY=test
      - DEBUG=1
    ports:
      - "4566:4566"
      - "4510-4559:4510-4559"
    networks:
      - localstack

  kafka:
    image: confluentinc/cp-kafka:7.4.0
    ports:
      - "29092:29092"
      - "9092:9092"
    environment:
      KAFKA_BROKER_ID: 1
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      # kafka:29092 for other containers, localhost:9092 for clients on the host
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka:29092,PLAINTEXT_HOST://localhost:9092
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT,PLAINTEXT_HOST:PLAINTEXT
      KAFKA_INTER_BROKER_LISTENER_NAME: PLAINTEXT
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:29092,PLAINTEXT_HOST://0.0.0.0:9092
    depends_on:
      - zookeeper
    networks:
      - kafka-net

  zookeeper:
    image: confluentinc/cp-zookeeper:latest
    ports:
      - "2181:2181"
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
      ZOOKEEPER_TICK_TIME: 2000
    networks:
      - kafka-net

  iceberg:
    image: tabulario/spark-iceberg:latest
    networks:
      - kafka-net

networks:
  localstack:
    driver: bridge
  kafka-net:
```

Step 3: Custom Glue Dockerfile

Create Dockerfile:

# https://stackoverflow.com/a/74598849

RUN rm /home/glue_user/spark/conf/hive-site.xml

# Removed as they conflict with structured streaming jars below

RUN rm /home/glue_user/spark/jars/org.apache.commons_commons-pool2-2.6.2.jar

RUN rm /home/glue_user/spark/jars/org.apache.kafka_kafka-clients-2.6.0.jar

RUN rm /home/glue_user/spark/jars/org.spark-project.spark_unused-1.0.0.jar

...

...

...

# Install additional dependencies

# Upgrade pip to the latest version

RUN pip3 install --upgrade pip

# Install Jupyterlab

RUN pip3 install jupyterlab

# Expose Jupyter port

EXPOSE 8888

# Install Poetry for dependency management

RUN pip3 install poetry==1.8.5

COPY pyproject.toml poetry.lock* /app/

COPY plain /app/plain

# Disable Poetry's virtual environment creation, install project dependencies via poetry

RUN poetry install

# Run the container - non-interactive

ENTRYPOINT [ "/bin/bash", "-l", "-c" ]

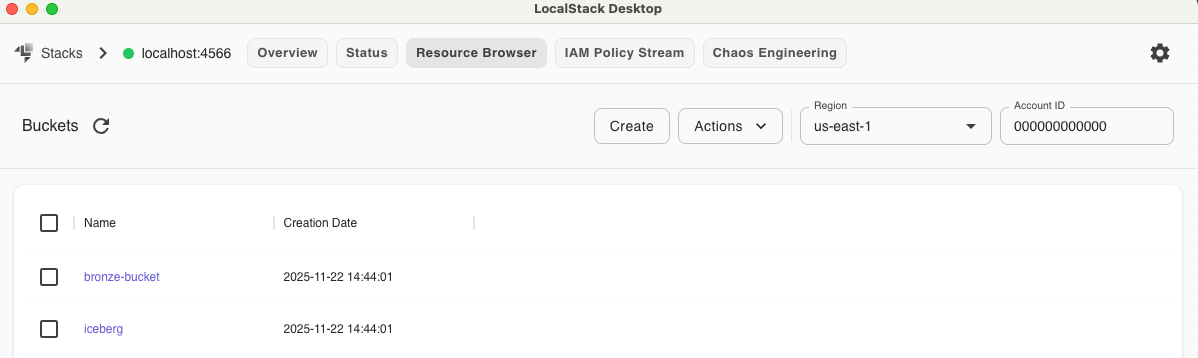

CMD ["/bin/bash"]Step 4: Initialize LocalStack Resources
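tflocal can only provision resources once LocalStack is accepting requests. LocalStack reports per-service status as JSON at /_localstack/health (http://localhost:4566/_localstack/health from the host). As a minimal sketch, the helper below polls that endpoint until S3 and DynamoDB report "available" or "running"; the function names, retry counts, and the injectable fetch parameter are illustrative assumptions, not part of the repo.

```python
import json
import time
import urllib.request

HEALTH_URL = "http://localhost:4566/_localstack/health"
READY_STATES = {"available", "running"}


def services_ready(health, required=("s3", "dynamodb")):
    """True if every required service in a health payload is ready."""
    services = health.get("services", {})
    return all(services.get(name) in READY_STATES for name in required)


def wait_for_localstack(url=HEALTH_URL, attempts=30, delay=2.0, fetch=None):
    """Poll the health endpoint until ready; `fetch` lets tests inject a fake."""

    def default_fetch(u):
        with urllib.request.urlopen(u, timeout=5) as resp:
            return json.load(resp)

    fetch = fetch or default_fetch
    for _ in range(attempts):
        try:
            if services_ready(fetch(url)):
                return True
        except OSError:
            pass  # LocalStack is not accepting connections yet
        time.sleep(delay)
    return False
```

Running wait_for_localstack() before tflocal apply avoids provider errors from a LocalStack container that is still starting.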

Navigate to terraform and run tflocal apply --auto-approve:

You notice the following locally emulated AWS services below:

resource "aws_s3_bucket" "bronze-bucket" {

bucket = "bronze-bucket"

acl = "private"

}

resource "aws_s3_bucket" "iceberg" {

bucket = "iceberg"

acl = "private"

}

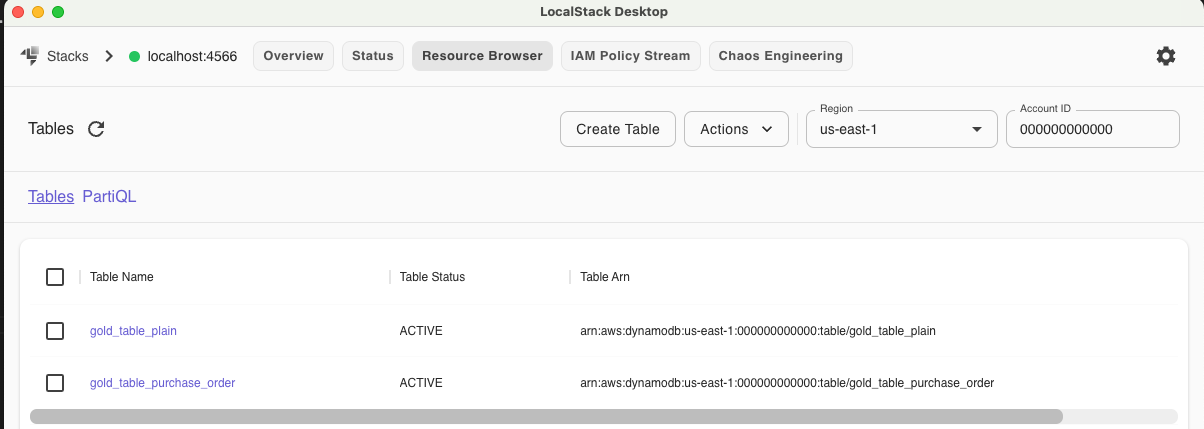

resource "aws_dynamodb_table" "dynamo_db_table_gold_table_plain" {

name = "gold_table_plain"

billing_mode = "PAY_PER_REQUEST"

hash_key = "id"

attribute {

name = "id"

type = "N"

}

}Step 5: Generated Kafka Topics Events for Data ingestion
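Each event the script that follows produces is a flat JSON object: an integer id between 1 and 100, a capitalized dictionary word as name, and an integer amount between 0 and 200. If you would rather build payloads from Python (for example in a unit test), a stdlib port of generate_event might look like this. The short WORDS list is an assumption standing in for /usr/share/dict/words.

```python
import json
import random

# Stand-in vocabulary (assumption); the bash script samples /usr/share/dict/words
WORDS = ["apple", "BANANA", "cherry", "delta", "echo"]


def generate_event(rng=random):
    """Build one event shaped like the bash generate_event() output."""
    word = rng.choice(WORDS)
    return json.dumps({
        "id": rng.randint(1, 100),                    # RANDOM % 100 + 1
        "name": word[:1].upper() + word[1:].lower(),  # capitalize like the awk call
        "amount": rng.randint(0, 200),                # RANDOM % 201
    })


if __name__ == "__main__":
    for _ in range(3):
        print(generate_event())
```

Passing a seeded random.Random makes the generated events reproducible in tests.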

Create scripts/generate_plain_events.bash:

```bash
#!/bin/bash
set -ex # Exit on error, print commands

# Kafka settings
TOPIC="plain-topic"
BOOTSTRAP_SERVERS="kafka:9092"
CONTAINER_NAME="docker_glue_pyspark_demo-kafka-1" # Replace with your actual container name

# Function to generate a random JSON event
generate_event() {
  id=$((RANDOM % 100 + 1))
  name=$(shuf -n 1 /usr/share/dict/words | awk '{print toupper(substr($0,1,1)) tolower(substr($0,2))}')
  amount=$((RANDOM % 201))
  echo "{\"id\": $id, \"name\": \"$name\", \"amount\": $amount}"
}

...

# Generate and send events for the specified number of iterations
for i in $(seq 1 "$iterations"); do
  event_data=$(generate_event)
  echo "Sending event: $event_data"

  # Execute the kafka-console-producer command inside the Docker container
  docker exec -i "$CONTAINER_NAME" \
    kafka-console-producer --topic "$TOPIC" --bootstrap-server "$BOOTSTRAP_SERVERS" \
    <<< "$event_data"
  echo "Event sent (exit code: $?)"

  sleep 1 # Adjust the sleep interval as needed
done
```

Step 6: Kafka Streaming Job (Bronze Layer)
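The bronze job that follows reads raw Kafka records, casts each binary value to a string, and parses it with from_json against an id/name/amount schema. The stdlib sketch below mirrors that contract for a single record, which can be handy for unit-testing producers. parse_kafka_value is a hypothetical helper, and returning None for malformed input is a simplification, not a reproduction of Spark's exact from_json null handling.

```python
import json

# Expected field types, mirroring the bronze job's StructType
SCHEMA = {"id": int, "name": str, "amount": int}


def parse_kafka_value(value):
    """Decode a Kafka message value (bytes) into a dict matching SCHEMA.

    Missing or wrongly typed fields become None (like nullable struct fields);
    payloads that aren't UTF-8 JSON objects return None.
    """
    try:
        data = json.loads(value.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None
    if not isinstance(data, dict):
        return None
    row = {}
    for field, typ in SCHEMA.items():
        v = data.get(field)
        # bool is a subclass of int in Python, so reject it explicitly
        ok = isinstance(v, typ) and not isinstance(v, bool)
        row[field] = v if ok else None
    return row
```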

Create plain/bronze_job.py:

```python
from pyspark.sql import SparkSession
from pyspark.sql.functions import from_json, col
from pyspark.sql.types import StructType, StructField, StringType, IntegerType

spark = SparkSession.builder \
    .appName("Bronze Layer Job") \
    .master("local[*]") \
    .config("spark.hadoop.fs.s3a.endpoint", "http://localstack:4566") \
    .config("spark.hadoop.fs.s3a.access.key", "test") \
    .config("spark.hadoop.fs.s3a.secret.key", "test") \
    .config("spark.hadoop.fs.s3a.impl", "org.apache.hadoop.fs.s3a.S3AFileSystem") \
    .config("spark.hadoop.fs.s3a.path.style.access", "true") \
    .config("spark.hadoop.fs.s3a.multiobjectdelete.enable", "false") \
    .config("spark.hadoop.fs.s3a.fast.upload", "true") \
    .config("spark.hadoop.fs.s3a.fast.upload.buffer", "disk") \
    .config("spark.hadoop.fs.s3a.endpoint.region", "us-east-1") \
    .config("spark.hadoop.fs.s3a.aws.credentials.provider", "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider") \
    .getOrCreate()

# Kafka broker address (internal listener) and topic
kafka_bootstrap_servers = "kafka:29092"
kafka_topic = "plain-topic"

bucket_name = "bronze-bucket"
output_s3_bucket = f"s3a://{bucket_name}/plain"

# Schema of the JSON events sent by generate_plain_events.bash
schema = StructType([
    StructField("id", IntegerType()),
    StructField("name", StringType()),
    StructField("amount", IntegerType()),
])

# Read from Kafka
df = spark \
    .readStream \
    .format("kafka") \
    .option("kafka.bootstrap.servers", kafka_bootstrap_servers) \
    .option("subscribe", kafka_topic) \
    .option("startingOffsets", "earliest") \
    .load()

# Extract the value and parse the JSON
parsed_df = df.selectExpr("CAST(value AS STRING) as value") \
    .select(from_json(col("value"), schema).alias("data")) \
    .select("data.*")

# A streaming query has a single sink: use the built-in Parquet file sink
# (rather than foreachBatch) to append new rows to the bronze bucket
query = parsed_df.writeStream \
    .format("parquet") \
    .option("path", output_s3_bucket) \
    .option("checkpointLocation", "/tmp/spark_checkpoints/bronze_layer/plain") \
    .trigger(processingTime="500 milliseconds") \
    .outputMode("append") \
    .start()

query.awaitTermination()
```

Step 7: Batch ETL Job - S3 (Bronze) to Iceberg (Silver Layer)

Create plain/silver_job.py:

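```python
from pyspark.sql import SparkSession

bucket_name = "bronze-bucket"
output_s3_bucket = f"s3a://{bucket_name}/plain"
s3_endpoint_url = "http://localstack:4566"

namespace_catalog = "local_catalog"
catalog_name = "local_catalog_plain"
table_name = "silver_table"
full_table_name = f"`{namespace_catalog}`.`{catalog_name}`.`{table_name}`"

# Iceberg catalog settings, plus global S3A settings: the Parquet read from the
# bronze bucket uses Spark's global Hadoop config, not the catalog's hadoop.* options
spark = SparkSession.builder \
    .appName("Silver Layer Job") \
    .master("local[*]") \
    .config("spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions") \
    .config("spark.sql.catalog.local_catalog", "org.apache.iceberg.spark.SparkCatalog") \
    .config("spark.sql.catalog.local_catalog.type", "hadoop") \
    .config("spark.sql.catalog.local_catalog.warehouse", "s3a://iceberg/warehouse") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.endpoint", s3_endpoint_url) \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.access.key", "test") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.secret.key", "test") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.connection.ssl.enabled", "false") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.path.style.access", "true") \
    .config("spark.hadoop.fs.s3a.endpoint", s3_endpoint_url) \
    .config("spark.hadoop.fs.s3a.access.key", "test") \
    .config("spark.hadoop.fs.s3a.secret.key", "test") \
    .config("spark.hadoop.fs.s3a.path.style.access", "true") \
    .config("spark.hadoop.fs.s3a.aws.credentials.provider", "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider") \
    .getOrCreate()


# Minimal sketches of the two helpers called below (hypothetical implementations)
def create_database_if_not_exists(spark, database, catalog=namespace_catalog):
    spark.sql(f"CREATE NAMESPACE IF NOT EXISTS `{catalog}`.`{database}`")


def create_table_if_not_exists(spark, catalog, database, table):
    # Columns mirror the JSON events landed in the bronze layer
    spark.sql(
        f"CREATE TABLE IF NOT EXISTS `{catalog}`.`{database}`.`{table}` "
        "(id INT, name STRING, amount INT) USING iceberg"
    )


# Create the database if it doesn't exist using PySpark SQL
create_database_if_not_exists(spark, catalog_name)

# Create the table if it doesn't exist using PySpark SQL
create_table_if_not_exists(spark, namespace_catalog, catalog_name, table_name)

# Read from S3 (Bronze)
bronze_path = output_s3_bucket
df = spark.read.format("parquet").load(bronze_path)

# Perform data cleaning and enrichment
cleaned_df = df.filter("amount < 100")

# Write to Iceberg (Silver)
cleaned_df.writeTo(full_table_name).append()

# Show the Silver Iceberg table records
spark.table(full_table_name).show()

spark.stop()
```

Step 8: Batch ETL Job - Iceberg to DynamoDB (Gold Layer)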
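The gold job that follows writes silver rows through boto3's low-level DynamoDB client, which expects every attribute wrapped in a type descriptor: "N" for numbers (passed as strings), "S" for strings, "BOOL" for booleans. Below is a stdlib sketch of that conversion as a reusable function. to_dynamo_item is a hypothetical helper, and skipping None values is a design choice here, since an "S" descriptor needs an actual string.

```python
def to_dynamo_item(row):
    """Convert a flat dict to DynamoDB's attribute-value format.

    bool -> {"BOOL": ...}, int/float -> {"N": "<string>"}, str -> {"S": ...};
    None values are skipped and any other type raises TypeError.
    """
    item = {}
    for key, value in row.items():
        if value is None:
            continue
        if isinstance(value, bool):  # check bool before int (bool subclasses int)
            item[key] = {"BOOL": value}
        elif isinstance(value, (int, float)):
            item[key] = {"N": str(value)}  # DynamoDB numbers travel as strings
        elif isinstance(value, str):
            item[key] = {"S": value}
        else:
            raise TypeError(f"Unsupported type for {key!r}: {type(value).__name__}")
    return item
```

Inside the gold job's loop you could then call dynamodb.put_item(TableName=dynamo_table_name, Item=to_dynamo_item(row.asDict())) instead of building each item by hand.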

Create plain/gold_job.py:

```python
import json

import boto3
from pyspark.sql import SparkSession

# LocalStack serves all emulated services (S3, DynamoDB, ...) on this endpoint
s3_endpoint_url = "http://localstack:4566"

namespace_catalog = "local_catalog"
catalog_name = "local_catalog_plain"
table_name = "silver_table"
full_table_name = f"`{namespace_catalog}`.`{catalog_name}`.`{table_name}`"

# Initialize Spark session
spark = SparkSession.builder \
    .appName("Gold Layer Job") \
    .master("local[*]") \
    .config("spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions") \
    .config("spark.sql.catalog.local_catalog", "org.apache.iceberg.spark.SparkCatalog") \
    .config("spark.sql.catalog.local_catalog.type", "hadoop") \
    .config("spark.sql.catalog.local_catalog.warehouse", "s3a://iceberg/warehouse") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.endpoint", "http://localstack:4566") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.access.key", "test") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.secret.key", "test") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.connection.ssl.enabled", "false") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.path.style.access", "true") \
    .config("spark.hadoop.fs.s3a.endpoint", "http://localstack:4566") \
    .config("spark.hadoop.fs.s3a.access.key", "test") \
    .config("spark.hadoop.fs.s3a.secret.key", "test") \
    .config("spark.hadoop.fs.s3a.impl", "org.apache.hadoop.fs.s3a.S3AFileSystem") \
    .config("spark.hadoop.fs.s3a.multiobjectdelete.enable", "false") \
    .config("spark.hadoop.fs.s3a.fast.upload", "true") \
    .config("spark.hadoop.fs.s3a.fast.upload.buffer", "disk") \
    .config("spark.hadoop.fs.s3a.endpoint.region", "us-east-1") \
    .config("spark.hadoop.fs.s3a.path.style.access", "true") \
    .config("spark.hadoop.fs.s3a.aws.credentials.provider", "org.apache.hadoop.fs.s3a.SimpleAWSCredentialsProvider") \
    .getOrCreate()

# Read from Iceberg (Silver)
df = spark.table(full_table_name)

# Target DynamoDB table (created by Terraform in Step 4)
dynamo_table_name = "gold_table_plain"
dynamodb = boto3.client("dynamodb", endpoint_url=s3_endpoint_url, region_name="us-east-1")

# Collect to the driver (fine for small demo data) and write items one by one.
# put_item replaces any existing item with the same "id" (the table's hash key).
data = df.collect()
for row in data:
    item = {
        "id": {"N": str(row["id"])},
        "name": {"S": row["name"]},
        "amount": {"N": str(row["amount"])},
    }
    dynamodb.put_item(TableName=dynamo_table_name, Item=item)
    print(f"Inserted {json.dumps(item)} into {dynamo_table_name}.")

print(f"Inserted {len(data)} items into {dynamo_table_name}.")

spark.stop()
```

Step 9: Launch the Environment

Start all services by running scripts/start-containers.bash, or run Compose directly:

```bash
docker-compose up --build -d
```

Stop all resources when you're done by running scripts/shutdown-containers.bash, or:

```bash
docker-compose down
```

Step 10: Access JupyterLab

Navigate to http://localhost:8888 to open the JupyterLab interface.

Step 11: Verify the Complete Setup

Verify LocalStack S3 and DynamoDB resources

LocalStack offers a desktop UI, LocalStack Desktop, for browsing the emulated resources, so you can visually confirm the data is there once your ETL jobs have finished running. You will see something like this:

LocalStack S3 Resources

LocalStack DynamoDB Resources

You can download LocalStack Desktop from the LocalStack website.
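When browsing the iceberg bucket, it helps to know where Iceberg's Hadoop catalog (type hadoop, as configured in the silver and gold jobs) places a table: under warehouse/namespace/table, with data files in data/ and versioned metadata files plus a version-hint.text pointer in metadata/. The small helper below derives those prefixes for the silver table; iceberg_table_paths is a hypothetical name, and it only builds strings without ever calling S3.

```python
def iceberg_table_paths(warehouse, namespace, table):
    """Return the prefixes a Hadoop-catalog Iceberg table uses under the warehouse."""
    # Multi-level namespaces ("a.b") map to nested directories ("a/b")
    base = f"{warehouse.rstrip('/')}/{'/'.join(namespace.split('.'))}/{table}"
    return {
        "table": base,
        "data": f"{base}/data",
        "metadata": f"{base}/metadata",
        "version_hint": f"{base}/metadata/version-hint.text",
    }


if __name__ == "__main__":
    # Values taken from the silver job's catalog configuration
    paths = iceberg_table_paths("s3a://iceberg/warehouse", "local_catalog_plain", "silver_table")
    for name, path in paths.items():
        print(f"{name:>12}: {path}")
```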

Query Iceberg table

In JupyterLab:

Create a notebook to inspect the Iceberg table data. Notebooks are saved under the notebooks folder.

```python
from pyspark.sql import SparkSession

# Initialize Spark session
spark = SparkSession.builder \
    .appName("Silver Layer Job") \
    .master("local[*]") \
    .config("spark.sql.extensions", "org.apache.iceberg.spark.extensions.IcebergSparkSessionExtensions") \
    .config("spark.sql.catalog.local_catalog", "org.apache.iceberg.spark.SparkCatalog") \
    .config("spark.sql.catalog.local_catalog.type", "hadoop") \
    .config("spark.sql.catalog.local_catalog.warehouse", "s3a://iceberg/warehouse") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.endpoint", "http://localstack:4566") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.access.key", "test") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.secret.key", "test") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.connection.ssl.enabled", "false") \
    .config("spark.sql.catalog.local_catalog.hadoop.fs.s3a.path.style.access", "true") \
    .getOrCreate()

df = spark.read.format("iceberg").load("local_catalog.local_catalog_plain.silver_table")
df.show(truncate=False)
print(f"Number of rows in the table: {df.count()}")
```

Pro Tips

1. Increase Performance

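Add the following to the glue-pyspark service in docker-compose.yml:

```yaml
glue-pyspark:
  environment:
    SPARK_DRIVER_MEMORY: "4g"
    SPARK_EXECUTOR_MEMORY: "4g"
```

2. Debug Spark Jobs

Access the Spark UI at http://localhost:4040 while jobs are running. The compose file only publishes port 18080 for glue-pyspark, so add "4040:4040" to its ports first.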
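Besides the web UI, a running Spark application serves a REST API under /api/v1 on the same port. Assuming port 4040 is published to the host, the sketch below lists running applications using only the standard library; list_spark_apps is a hypothetical helper, and its opener parameter exists purely so it can be tested without a live Spark.

```python
import json
import urllib.request

SPARK_UI = "http://localhost:4040"  # assumes 4040 is published by the container


def list_spark_apps(base_url=SPARK_UI, opener=urllib.request.urlopen):
    """Return (id, name) pairs from Spark's /api/v1/applications endpoint."""
    with opener(f"{base_url}/api/v1/applications", timeout=5) as resp:
        apps = json.load(resp)
    return [(app["id"], app["name"]) for app in apps]
```

The same API exposes per-application resources such as jobs and stages, which can be easier to script against than the UI.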

Common Issues

LocalStack connection fails: in code running inside a container, use the service names (localstack, kafka) rather than localhost.

Kafka not ready: Wait 30-60 seconds after docker-compose up before creating topics.

Memory errors: Increase Docker memory allocation to 8GB+ in settings.
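For the "Kafka not ready" case, rather than sleeping a fixed 30-60 seconds, you can poll until the broker's port accepts TCP connections. This is a hedged sketch: an open port means the listener is up, not necessarily that the broker has finished starting, so treat it as a first check. wait_for_port is a hypothetical helper built on socket.create_connection.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Return True once host:port accepts a TCP connection, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Connection succeeded: something is listening on host:port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

From the host you might call wait_for_port("localhost", 9092) before running generate_plain_events.bash; from inside a container, use ("kafka", 29092) instead.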

Wrapping Up

This setup has transformed my development workflow. I can now:

- ✅ Test Glue jobs locally in seconds

- ✅ Debug streaming pipelines without AWS costs

- ✅ Experiment with Iceberg features safely

- ✅ Catch bugs before production deployment

The complete code is available in my docker_glue_pyspark_demo repository.

What’s Next?

Here are some ways this local setup could be extended:

- Add data quality checks with dbt or Great Expectations.

- Implement unit tests and extend test coverage for the jobs.

- Set up CI/CD pipelines with GitHub Actions for job deployment, unit tests, performance testing, and more.

- Explore Iceberg’s time travel capabilities.

Feel free to fork the repo and adapt it to your needs.

Till next time, happy coding!